AI Safety or Security? What We Lose in Translation

The shift from AI safety to security is subtle but seismic. It changes who gets heard, what gets justified, and how power is exercised.


Laila Shaheen

8/1/2025 · 5 min read

Words matter. Words hold power. Words shape discourse.

Between 2023 and 2025, discussions around artificial intelligence underwent a subtle yet profound shift in terminology from AI safety to AI security.

While many may use these words interchangeably, they are in fact fundamentally different. They trigger different emotions, advance different priorities, and serve different agendas.

To call something a matter of security is to securitize it. And to securitize an issue is to take it from the realm of normal politics, where citizens can debate, influence, and hold leaders accountable, to the realm of exceptional, emergency politics, where democratic processes are sidelined in favor of whatever the state deems necessary to defend its national interest.

In this shift, citizens cease to be participants and instead become objects of security. Their fears are weaponized, their access to critical information is curtailed, and their rights are quietly bargained away, all in the name of protection from some elusive threat.

To see this dynamic in action, walk with me down Senate hearings lane, where the evolving language between American senators and OpenAI CEO Sam Altman lays it bare.

Sam Altman has appeared twice before Congress: once in May 2023 and again in May 2025.

In the 2023 testimony, AI "safety" dominated the discussion, surfacing twenty-nine times as the focal point of concern. Securitized language, by contrast, made only a modest appearance: "security" was mentioned five times, never in the context of national security, and the strategic rival, "China", appeared merely six times.

By the 2025 testimony, however, the vocabulary surrounding AI had, to the skeptical eye, undergone a striking transformation. "Security" now took center stage with thirty-seven mentions, "safety" was demoted to six, and "China" emerged as the leitmotif, invoked forty-two times.

In two short years, discussions of AI, from both the public and the private perspectives, shifted from keeping the technology safe to defending the nation against a national security threat.

The words changed, and with them, the politics.

So why does this matter? Allow me to present three key concerns.

Firstly, when we securitize an issue, the lines between the military and the civic, the domestic and the foreign, begin to dissolve. “National Security” becomes a catch-all justification for state action. A universal alibi, if you will. Once the label is fixed, actions taken to address it no longer need public justification. The term itself becomes the legitimizer, as long as the audience, the object of security, accepts the framing.

Historically, a similar linguistic maneuver emerged during the Cold War. American officials deliberately reframed ‘security’ as national and state security, replacing the previously favored military term, defense.

The move was strategic: the war effort demanded a fusion of military and civilian activities, and blurring those boundaries became essential. Unlike ‘defense,’ which carried a strictly geopolitical and military connotation, ‘security’ could be invoked more broadly to rally the public against threats both foreign and domestic. As this episode demonstrates, the dismantling of such distinctions began with the seemingly benign act of choosing a different word.

Secondly, there is a real tension between security and individual liberty. History shows this pattern well: post‑9/11 policies like the Patriot Act, the COVID‑19 surveillance creep, and the UK’s “Snoopers’ Charter” all extended state oversight in the name of security.

These policies, like many others, were born in moments when a threat, or a threatening actor, endangered national security. In exceptional times, exceptional measures are tolerated. And one can understand such tolerance. The trouble begins when the moment passes but the measure remains. What was once temporary and extraordinary quietly becomes ordinary, and societies adjust, almost imperceptibly, their expectations of individual liberties.

Thirdly, some issues genuinely warrant securitization: nuclear weapons, military defense, and border protection, to name a few. However, the securitization under discussion here is of a different sort: a preemptive framing of issues that pose no existential risk in the real sense of the word. We now find ourselves in an era of over-securitization. “National Security” is invoked less as a shield against real danger and more as a tool to stoke fear, justify extraordinary measures, and rally public compliance.

AI complicates this dynamic further. Unlike nuclear technologies, developed in government labs under strict state oversight, AI is largely controlled by a handful of private companies whose primary allegiance is to shareholders, not the state, and certainly not its citizens.

Yet these corporations have become key securitizing actors, framing AI as a national security threat to consolidate power, justify reckless innovation, and sharpen their competitive edge against both domestic and foreign rivals.

Consider OpenAI's letter to the Office of Science and Technology Policy. Its opening line sets the tone:

“As America’s world-leading AI sector approaches artificial general intelligence (AGI), with a Chinese Communist Party (CCP) determined to overtake us by 2030, the Trump Administration’s new AI Action Plan can ensure that American-led AI, built on democratic principles, continues to prevail over CCP-built autocratic, authoritarian AI.”

From there, the letter reads less like a policy recommendation and more like a corporate wish list. OpenAI calls on the government to fast-track facility clearances for frontier AI labs "committed to supporting national security," ease compliance with federal security regulations for AI tools, and accelerate AI testing, deployment, and procurement across federal agencies.

The company also urges the government to tighten export controls on OpenAI’s competitors while promoting the global adoption of "American AI systems", whatever that means, and, predictably, to relax regulations on privacy, data ownership, and intellectual property.

The message is clear: if you fail to loosen regulations and speed approvals, the U.S. will undoubtedly fall behind China, putting our national interests at grave risk.

This kind of rhetoric, so bluntly designed to manipulate public perception and justify letting private AI companies 'move fast and break things' under the threat of the communist boogeyman, is deeply dangerous.

If the bar for what counts as a national security issue continues to drop, our capacity to act as informed, engaged citizens will steadily erode.

So what can we do?

Well, we can start by talking about it. Naming it. Challenging it.

Getting familiar with the ways we are being manipulated, by both state and corporate actors who capitalize on fear, is the first step toward reclaiming our democratic agency.

When we recognize how the language of security is used to shut down debate, limit transparency, and fast-track harmful policies, we can begin to resist it.

Public awareness is the antidote to manufactured urgency.

So the next time you hear the words “national security” uttered in whatever context, but especially around AI, pause and ask: whose security? Against what threat? And at what cost?

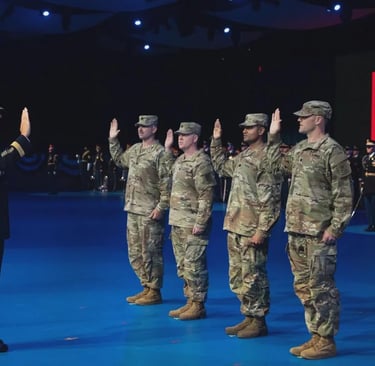

Army Chief of Staff Gen. Randy A. George administers the Oath of Office to four new U.S. Army Lt. Cols. during a Detachment 201: The Army’s Executive Innovation Corps (EIC) commissioning ceremony in Conmy Hall, Joint Base Myer-Henderson Hall, Va., June 13, 2025. The Army’s EIC is an initiative that places top tech executives into uniformed service within the Army Reserve. (U.S. Army photo by Leroy Council)

© 2025. The PoliTech Brief. All rights reserved.